KEY LEARNINGS

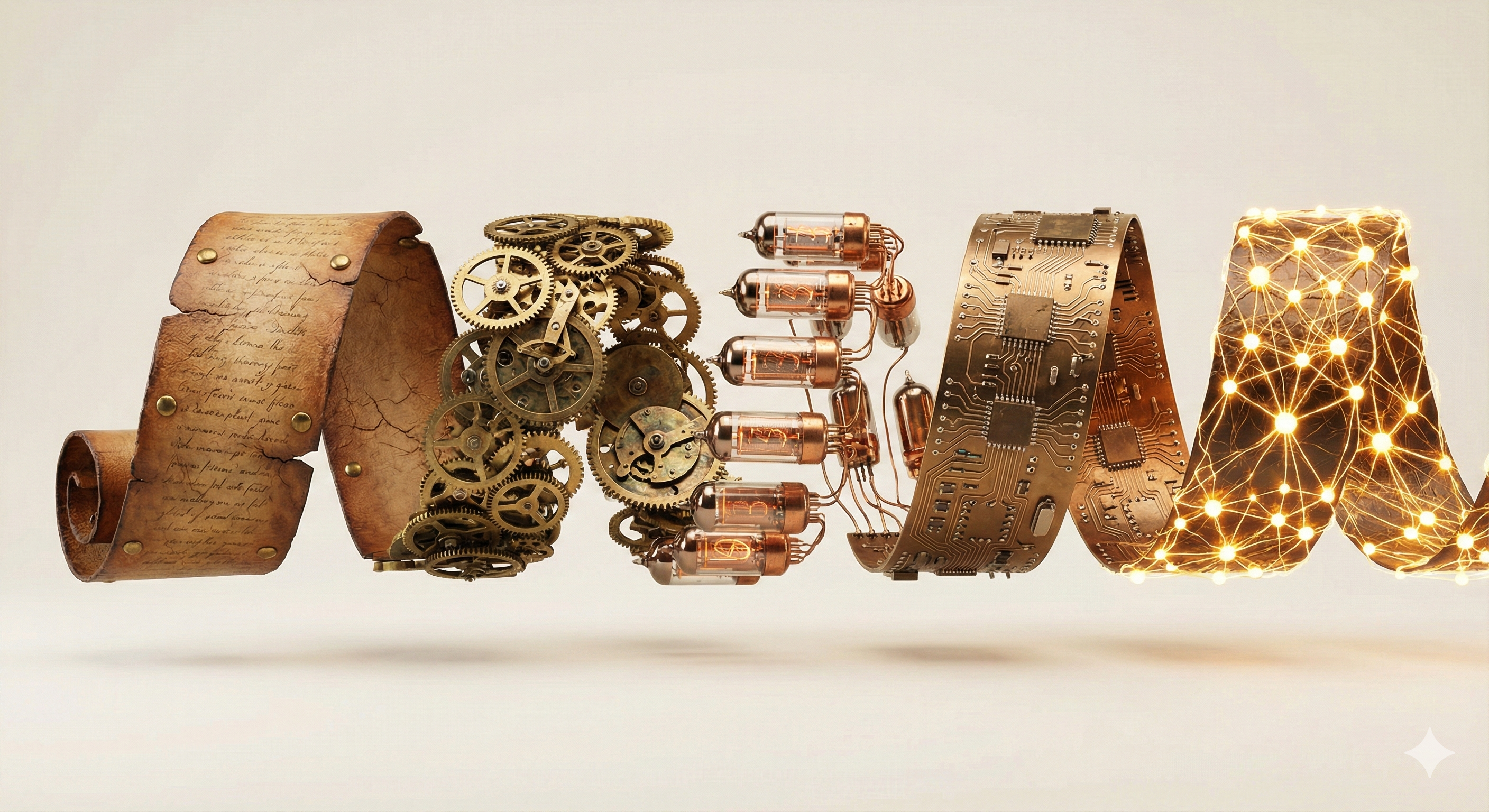

- The AI stack consists of five interdependent layers: Hardware, Infrastructure, Platforms, Models, and Applications.

- Hardware concentration is a critical risk, with NVIDIA controlling approximately 80-90% of the AI chip market.

- Understanding the difference between training (the largely up-front cost of creating a model) and inference (the recurring cost incurred every time the model is used) is vital for cost forecasting.

- Many user-facing AI applications are actually 'thin wrappers' that rely entirely on third-party foundation models.

- Effective governance requires mapping data flows and dependencies through every layer of the stack, not just the application layer.
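The dependency mapping described in the last point can be sketched in a few lines of Python. This is a minimal illustration, not a governance tool: the component names (`support-chatbot`, `hosted-llm-api`, and so on) and the `STACK` inventory are hypothetical, chosen only to show a thin-wrapper application whose dependencies reach down through all five layers.

```python
from collections import deque

# Hypothetical inventory (all names are illustrative assumptions):
# each component records its stack layer and its direct dependencies.
STACK = {
    "support-chatbot": {"layer": "Applications", "depends_on": ["hosted-llm-api"]},
    "hosted-llm-api": {"layer": "Models", "depends_on": ["ml-platform"]},
    "ml-platform": {"layer": "Platforms", "depends_on": ["cloud-gpu-cluster"]},
    "cloud-gpu-cluster": {"layer": "Infrastructure", "depends_on": ["gpu-accelerators"]},
    "gpu-accelerators": {"layer": "Hardware", "depends_on": []},
}


def trace_dependencies(component, stack=STACK):
    """Return (name, layer) pairs for everything `component` relies on,
    directly or indirectly, in breadth-first order."""
    seen = set()
    order = []
    queue = deque(stack[component]["depends_on"])
    while queue:
        name = queue.popleft()
        if name in seen:
            continue  # skip components already visited via another path
        seen.add(name)
        order.append((name, stack[name]["layer"]))
        queue.extend(stack[name]["depends_on"])
    return order


def layers_touched(component, stack=STACK):
    """Return the set of layers the component itself and its dependencies occupy."""
    layers = {stack[component]["layer"]}
    layers.update(layer for _, layer in trace_dependencies(component, stack))
    return layers


if __name__ == "__main__":
    for name, layer in trace_dependencies("support-chatbot"):
        print(f"{layer}: {name}")
```

Even in this toy inventory, the application that looks like a single product touches every layer of the stack, which is why a review limited to the application layer would miss most of its dependencies.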

- 🌐 NVIDIA: AI Data Center Reference Architecture. Technical reference for enterprise AI infrastructure.

- 🌐 Google Cloud: TPU vs GPU for AI. Comparison of hardware options for AI workloads.

- 📄 Stanford HAI: AI Index Report 2024. Comprehensive analysis of compute trends in AI.

- NVIDIA. (2024). Data Center Products and Hardware Specifications.

- Epoch AI. (2024). Trends in Machine Learning Hardware.

- Menlo Ventures. (2024). The Modern AI Stack: Design Principles.