KEY LEARNINGS

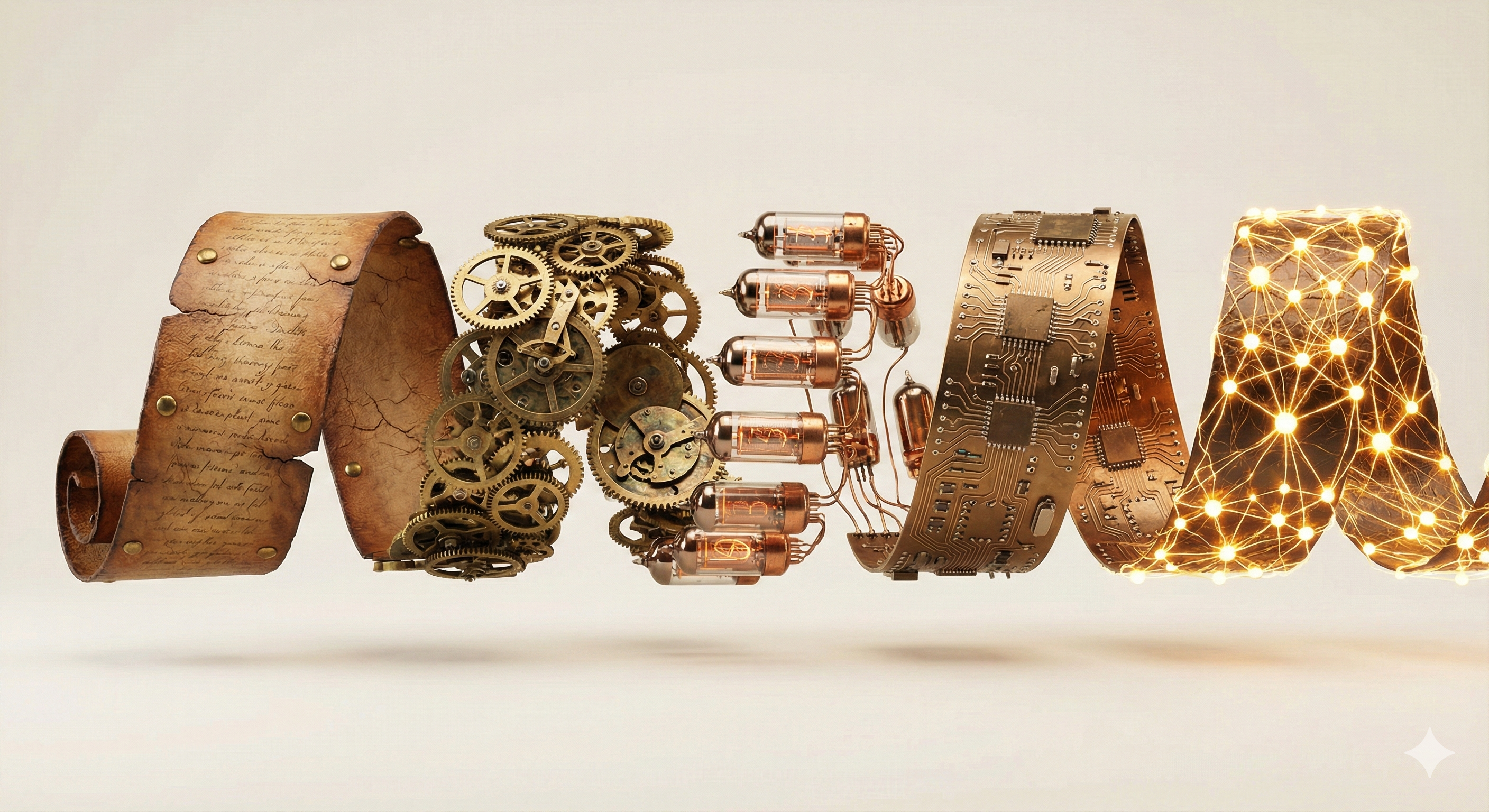

- Foundation models represent a paradigm shift from training specialized models for every task to adapting one massive model for many tasks.

- The creation of these models involves two distinct phases: expensive 'pre-training' on broad data and cheaper 'fine-tuning' for specific needs.

- Transfer learning lets these systems apply knowledge acquired during pre-training on broad internet text to tasks they were never explicitly trained for, such as summarizing legal briefs.

- A critical governance risk is 'homogenization,' where errors or biases in a single foundation model propagate to thousands of downstream applications.

- Selecting a foundation model requires rigorous due diligence on training data, safety testing, and provider terms, not just performance metrics.

- 📄 Stanford CRFM: On Foundation Models. Comprehensive paper on foundation model opportunities and risks.

- 🌐 EU AI Act: General-Purpose AI Models. EU regulatory approach to foundation models.

- 📄 NIST: Generative AI Profile. NIST guidance on generative AI governance.
