KEY LEARNINGS

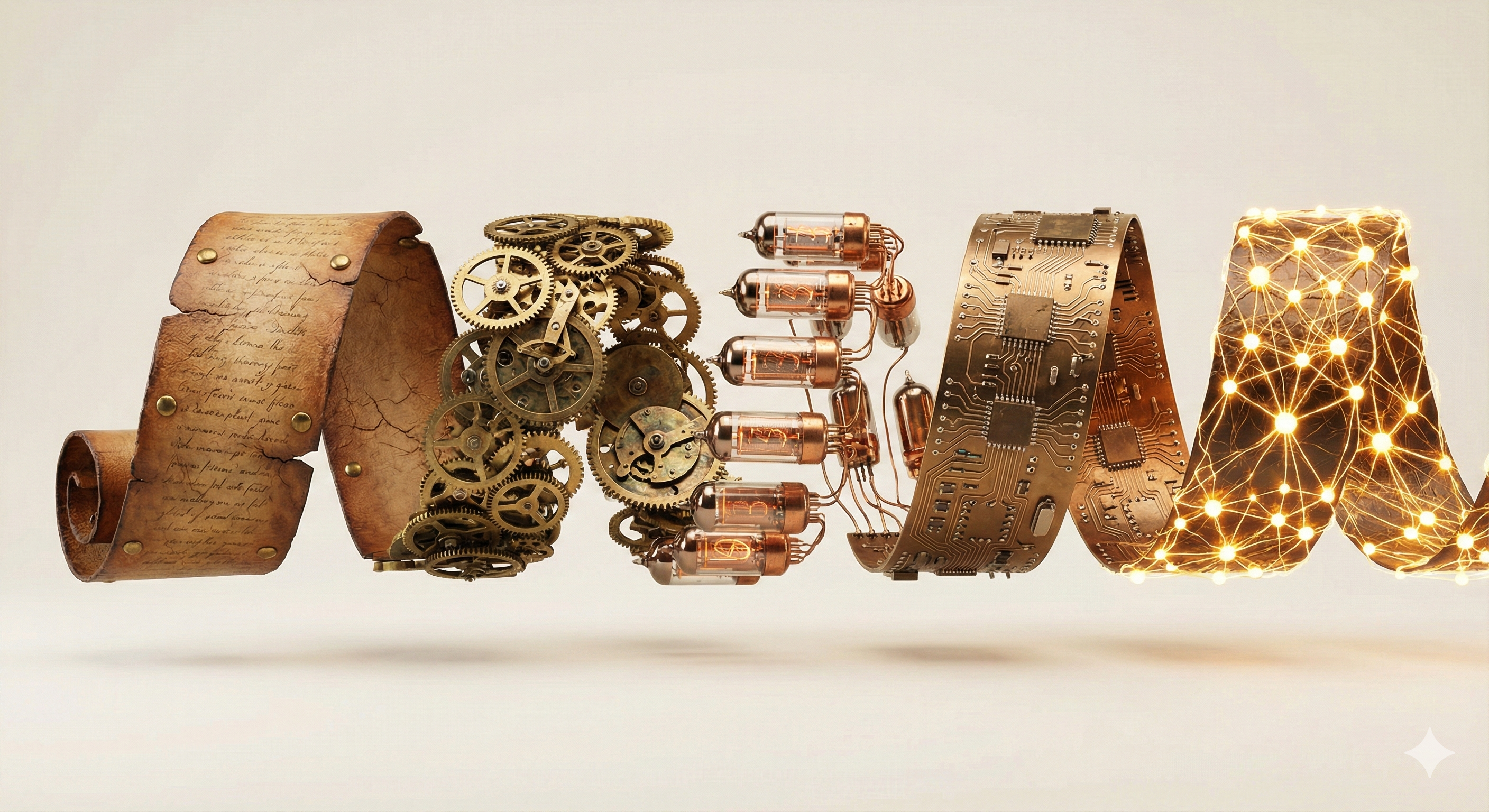

- Multimodal AI systems can process, and in some cases generate, more than one type of data (text, images, audio, and video) within a single model.

- These models work by converting each type of input into 'embeddings': numerical vectors in a shared representation space where related content lies close together. This shared space lets the AI relate words to images.

- The ability to 'see' introduces new security risks, such as visual prompt injection, where malicious instructions are hidden inside an image and the model may follow them as if they came from the user.

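- Generative multimodal systems make realistic synthetic images, audio, and video easy to produce, which undermines trust in media and creates a need for provenance standards such as C2PA that record where content came from and how it was edited.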

- Governance must evolve to address biometric privacy risks, as systems that analyze images can inadvertently identify individuals.
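The idea of a shared embedding space can be sketched with a few hand-picked vectors. This is a minimal illustration under stated assumptions, not how any particular model works: the 4-dimensional vectors below are invented for the example, whereas a real CLIP-style model produces much longer vectors from trained text and image encoders. The sketch shows why a shared space matters: once an image and several captions are vectors in the same space, cosine similarity can rank which caption best describes the image.

```python
import math

# Hypothetical toy embeddings, hand-picked for illustration only.
# A real multimodal model would produce these from trained text and image
# encoders, with hundreds of dimensions rather than four.
text_embeddings = {
    "a photo of a dog": [0.9, 0.1, 0.0, 0.2],
    "a photo of a cat": [0.1, 0.9, 0.1, 0.2],
    "a bowl of soup": [0.0, 0.1, 0.9, 0.3],
}

# Hypothetical embedding of a dog photo, placed in the same shared space.
image_embedding = [0.8, 0.2, 0.1, 0.3]


def cosine_similarity(a, b):
    """Cosine of the angle between two vectors: 1.0 means same direction."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def best_caption(image_vec, captions):
    """Return the caption whose text embedding is closest to the image embedding."""
    return max(captions, key=lambda c: cosine_similarity(image_vec, captions[c]))


if __name__ == "__main__":
    # Score every caption against the image, then report the closest one.
    for caption, vec in text_embeddings.items():
        print(f"{caption!r}: {cosine_similarity(image_embedding, vec):.3f}")
    print("best match:", best_caption(image_embedding, text_embeddings))
```

Cosine similarity is used here because it compares direction rather than magnitude, which is the usual choice for comparing embeddings; the same ranking step underlies image search by text query.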

- 📄 OpenAI: GPT-4V System Card. Technical safety documentation for GPT-4 with vision.

- 🌐 C2PA Technical Specification. Official documentation of the C2PA content provenance standard.

- 📄 Anthropic: Claude 3 System Card. Safety documentation for a multimodal AI system.